Quickstart

1. Preparation

Install the required versions of Python and Node.js.

- Install Python: Python 3.10 is recommended.

- Install Node.js: v16 or later is recommended.

You can also use a tool such as conda to set up the Python environment.
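The version requirements above can be checked before building. The following is a minimal sketch, not part of Chat2Graph itself: the helper names `python_ok`, `node_ok`, and `installed_node_version` are hypothetical, and the check assumes that `node --version` prints a string of the form `v18.17.0`.

```python
import re
import shutil
import subprocess
import sys


def python_ok(version=sys.version_info[:2]):
    """Return True when the (major, minor) version is the recommended 3.10."""
    return tuple(version) == (3, 10)


def node_ok(version_text):
    """Return True when a version string such as 'v18.17.0' is v16 or newer."""
    match = re.match(r"v?(\d+)", version_text.strip())
    return bool(match) and int(match.group(1)) >= 16


def installed_node_version():
    """Return the output of `node --version`, or None when node is not on PATH."""
    node = shutil.which("node")
    if node is None:
        return None
    result = subprocess.run(
        [node, "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip() or None


if __name__ == "__main__":
    print("Python 3.10:", python_ok())
    node_version = installed_node_version()
    print("Node.js >= 16:", node_version is not None and node_ok(node_version))
```

Because 3.10 is a recommendation rather than a hard requirement, a `False` result for Python is a hint to double-check the environment, not necessarily a blocker.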

2. Build & Start

2.1. Clone Code

2.2. Build Chat2Graph

2.3. System Configuration

Create a .env file based on .env.template.

Configure the environment variables (e.g., LLM parameters). The DeepSeek-V3 model is recommended (see Configure .env).
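As a rough illustration of this step, the sketch below copies .env.template to .env when no .env exists yet, then lists the keys that still have empty values. It assumes the file uses plain `KEY=VALUE` lines with `#` comments. The helper names `ensure_env_file` and `unset_keys` are hypothetical, and the actual variable names are whatever .env.template defines.

```python
import shutil
from pathlib import Path


def ensure_env_file(project_dir="."):
    """Copy .env.template to .env unless .env already exists; return the .env path."""
    root = Path(project_dir)
    env_path = root / ".env"
    if not env_path.exists():
        shutil.copyfile(root / ".env.template", env_path)
    return env_path


def unset_keys(env_path):
    """Return the keys of a KEY=VALUE file whose values are empty."""
    missing = []
    for line in Path(env_path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Treat empty strings and empty quoted strings as unset.
        if not value.strip().strip("\"'"):
            missing.append(key.strip())
    return missing
```

Calling `unset_keys(ensure_env_file())` from the project root gives a quick list of values that may still need to be filled in before starting the server.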

2.4. Start Chat2Graph

The following log indicates that the Chat2Graph server has been started.

3. Use Chat2Graph

You can access Chat2Graph in the browser at http://localhost:5010/. For detailed usage, refer to the "Cookbook".

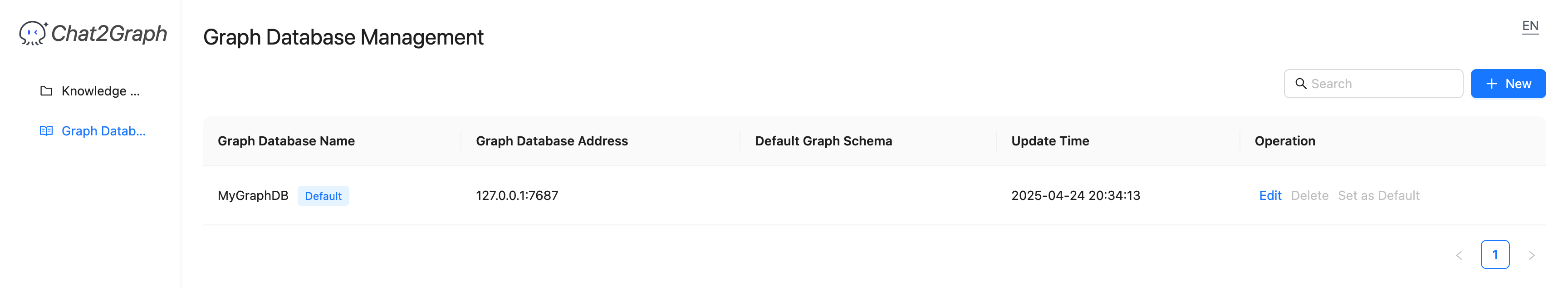
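If the page does not load, a quick reachability check can help tell a server that is not running from a browser-side problem. This is a minimal sketch using only the Python standard library. The helper name `server_is_up` is hypothetical, and the default URL is the address given above.

```python
import urllib.error
import urllib.request


def server_is_up(url="http://localhost:5010/", timeout=3.0):
    """Return True when an HTTP request to the URL receives any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar errors.
        return False


if __name__ == "__main__":
    print("Chat2Graph reachable:", server_is_up())
```

The check only confirms that something answers HTTP on that port. It does not verify that the responder is Chat2Graph.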

3.1. Register Graph Database

Register a graph database instance in advance to experience the full "Chat to Graph" functionality of Chat2Graph. Currently supported databases include Neo4j and TuGraph.

For details, refer to the Graph Database documentation.

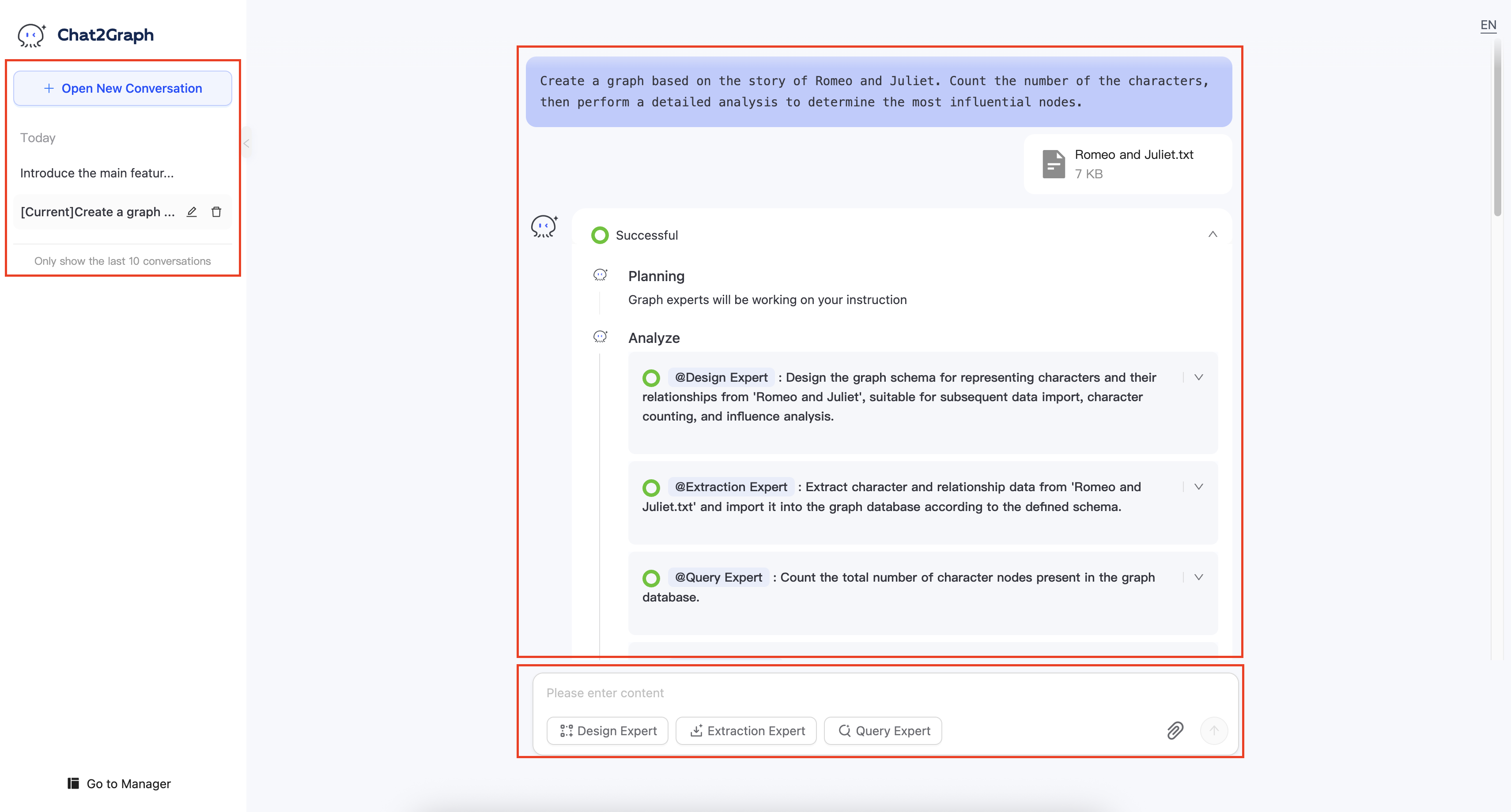

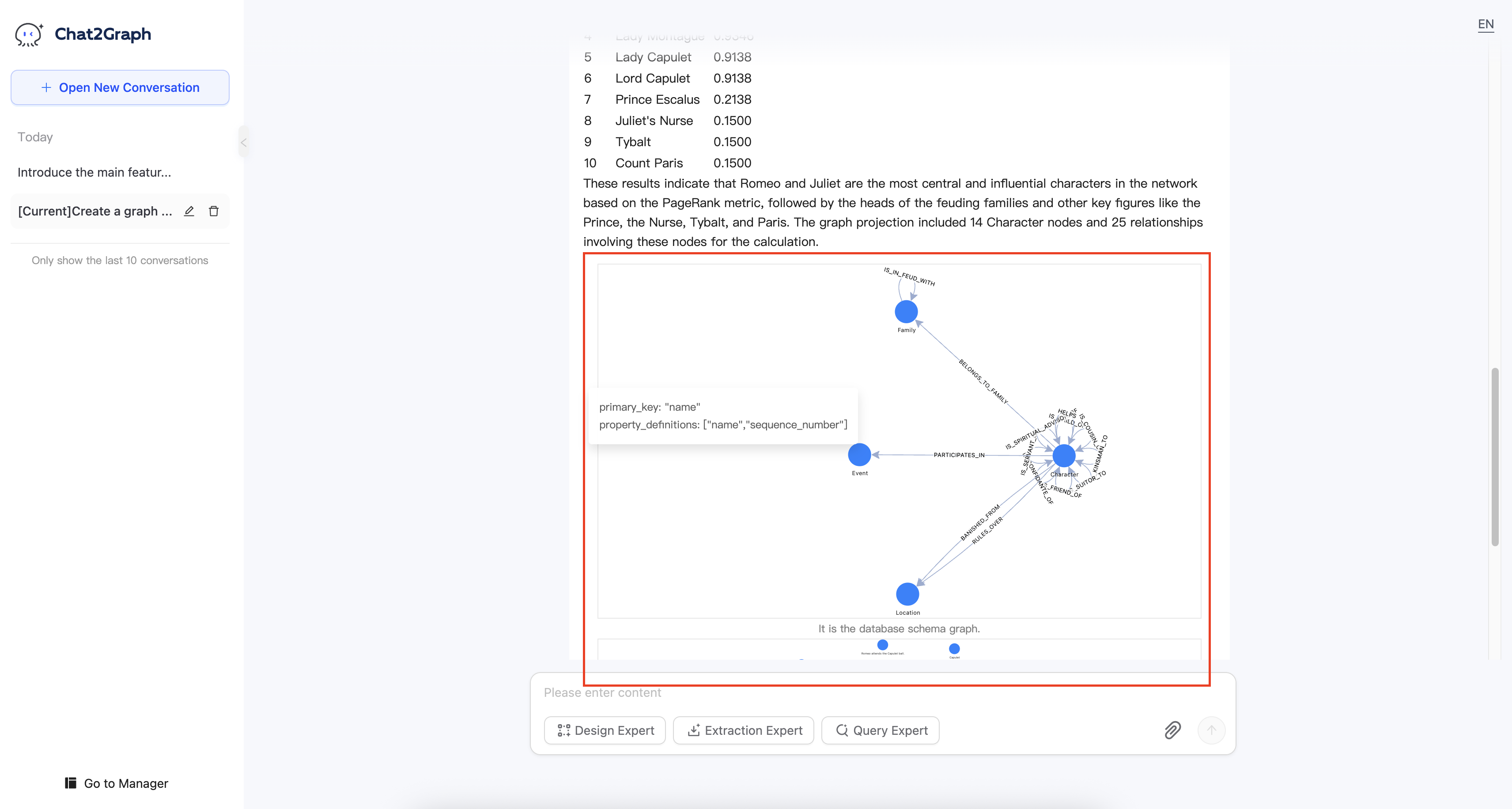

3.2. Chat to Graph

Chat2Graph automatically completes knowledge graph construction and analysis tasks.

It also supports real-time rendering of graph models and data.

4. Integrate Chat2Graph

Chat2Graph provides a clean and simple SDK API, allowing you to easily customize the agent system. For details, refer to the "SDK Reference".

4.1. Configure LLM Parameters

4.2. Initialize AgenticService

Customize the chat2graph.yml file to initialize AgenticService in a single step.